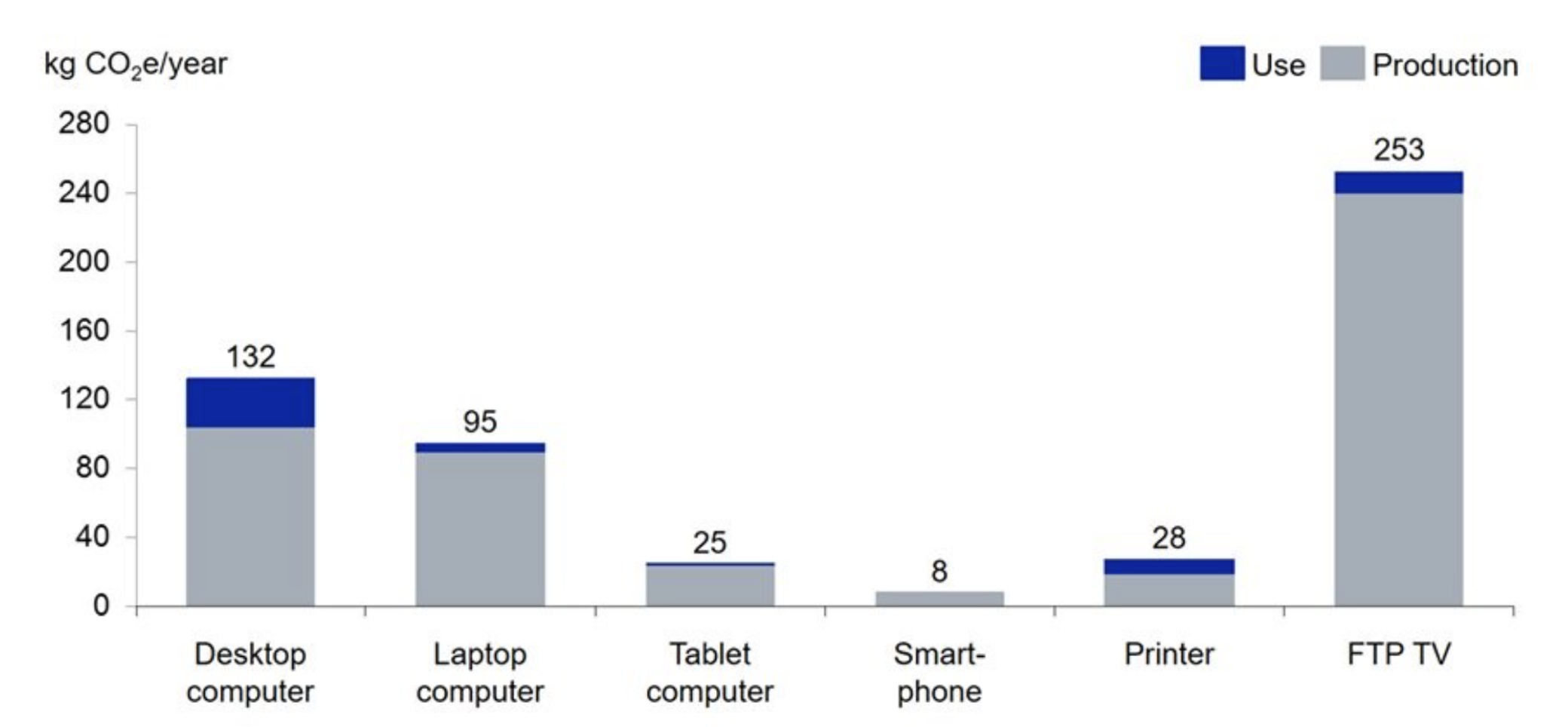

“Our digital lives are responsible for high CO2 emissions. They are mainly generated during the manufacture of our devices and the operation of data centres.”

Methodology

The Laptop Hub focuses on promoting sustainable laptop purchasing practices among students at the University of Zurich. Many students are unaware of the environmental impact of their digital devices and often buy laptops that do not match the academic needs of their study programs, so the devices have to be replaced more often.

The production phase of laptops has a significant environmental impact due to the energy-intensive manufacturing process and global transportation. Hilty and Bieser (2017) indicate that production accounts for a larger share of a laptop’s carbon footprint than its energy consumption during use.

Figure 1: Average greenhouse gas emissions per end-user device during production and use, by device type. Source: Hilty, Lorenz; Bieser, Jan (2017). Opportunities and Risks of Digitalization for Climate Protection in Switzerland. Zurich: University of Zurich.

Since the energy consumption during operation is relatively low, the lifespan of the device plays a crucial role in determining its environmental impact. The most sustainable laptop is the one you don’t buy: the longer a laptop is used, the more years its initial production emissions are spread over, which lowers its per-year carbon footprint.
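The effect of lifespan on the per-year footprint can be illustrated with a small calculation. This is a minimal sketch: the function name and the emission figures are hypothetical placeholders chosen only to show the arithmetic, not values taken from the cited study.

```python
def annual_footprint(production_kg: float, use_kg_per_year: float, lifespan_years: float) -> float:
    """Average yearly emissions: production spread over the lifespan, plus yearly use."""
    if lifespan_years <= 0:
        raise ValueError("lifespan_years must be positive")
    return production_kg / lifespan_years + use_kg_per_year


# Hypothetical figures (kg CO2-equivalent), for illustration only.
PRODUCTION_KG = 200.0
USE_KG_PER_YEAR = 10.0

for years in (2, 4, 8):
    print(years, annual_footprint(PRODUCTION_KG, USE_KG_PER_YEAR, years))
```

With production dominating the total, doubling the lifespan roughly halves the production share of each year's footprint, while the use-phase share stays the same.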

By making informed purchasing decisions and prioritizing durable, repairable devices, we can contribute significantly to a more sustainable use of technology. To support such decisions, we use a research-driven approach to guide students’ choices.

Research Approach

We observed student purchasing behaviours and analysed the computational requirements of different study programs to provide tailored recommendations. In particular, we reached students from five study programs: Informatics, Banking & Finance, Business Administration, Economics, and Communication Sciences.

Our methodology includes:

- Surveys: we conducted structured surveys targeting current UZH students to gather data on laptop purchasing behaviours, awareness of sustainability practices, device usage patterns, and replacement frequency. The survey design follows standard social research practices to ensure representativeness.

- Curriculum-based analysis: to identify device needs across disciplines, we analysed laptop requirements provided by various academic coordinators. This enabled us to provide the technical requirements tailored to different fields of study.

- Scoring model: our evaluation framework consists of three distinct scores designed to guide incoming students toward optimal laptop choices. Based on quantitative surveys, we built the Student Recommendation score; based on the metrics provided by recognized ecolabels and tools (iFixit, EPEAT, TCO Certified), we built the Sustainability score; finally, based on the laptop requirements received from each study program, we determine whether a laptop fulfils the requirements or not.

Student Recommendation Score

Purpose: Quantify how likely students are to recommend their current laptop to peers, reflecting real-world satisfaction and perceived value.

Requirement Score

Purpose: Evaluate how well a laptop meets the technical and practical needs of a student’s study program.

Sustainability Score

As mentioned before, the most sustainable laptop is the laptop you don’t buy, so the most important step is prolonging a laptop’s life. For this reason, our score prioritizes repairability while also considering sustainability criteria based on the following certifications and tools:

- EPEAT is a global assessment tool that covers lifecycle impacts. Rating system: bronze, silver, gold.

- TCO Certified targets issues in four key areas: climate, substances, circularity, and supply chain.

- iFixit Repairability Score directly measures how easy a laptop is to repair.

Study Program Clustering and Device Recommendation Logic

To make laptop recommendations meaningful and academically grounded, we first clustered study programs into functionally similar academic fields. This clustering is the foundation for all subsequent score calculations, for both recommendation and sustainability. By grouping programs with overlapping academic demands, device usage patterns, and software needs, we could assign different weightings to the scoring dimensions in a consistent and well-motivated manner.

Programs were classified into ten clusters based on their curriculum structure, technological intensity, and the nature of student tasks. For example, programs like Artificial Intelligence, Data Science, and Informatics were placed into technical clusters due to their high-performance computing demands, frequent use of coding environments, and compatibility requirements with Unix-based systems. In contrast, disciplines such as Business Administration and Political Science were grouped into portability-oriented clusters, where long battery life, lightweight form factors, and mobile usability were paramount.
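As a rough illustration, the program-to-cluster assignment can be thought of as a lookup table. The sketch below lists only the memberships named in the paragraph above; the cluster labels and the `cluster_for` helper are hypothetical, and the remaining clusters are not shown because their composition is not described here.

```python
from typing import Optional

# Only the programs named in the text; labels are illustrative, not official names.
PROGRAM_CLUSTER = {
    "Artificial Intelligence": "technical",
    "Data Science": "technical",
    "Informatics": "technical",
    "Business Administration": "portability",
    "Political Science": "portability",
}


def cluster_for(program: str) -> Optional[str]:
    """Return the cluster label for a study program, or None if it is not listed."""
    return PROGRAM_CLUSTER.get(program)


print(cluster_for("Informatics"))
print(cluster_for("Political Science"))
```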

This clustering approach ensured that the calculation of Recommendation Scores could reflect the actual conditions of academic work. It allowed for dynamic weighting: performance-heavy fields received proportionally higher weight on hardware specifications such as CPU and RAM, while clusters with less computational need received greater emphasis on portability and user experience. Similarly, Software Compatibility and Price were scaled based on what students in each cluster identified as critical through survey responses.

Furthermore, the clustering made it possible to apply differentiated logic in calculating Sustainability Scores, where factors such as repairability and energy efficiency mattered more in fields with high device longevity expectations, such as Informatics or Communication Science.

By embedding cluster logic into the score calculation framework, this methodology avoids the one-size-fits-all pitfall common in consumer technology recommendations. Instead, it offers a nuanced system where each recommendation is grounded in the educational context and usage profile of the student population it aims to serve.

How do we calculate our scores?

Student Recommendation Score

The core of the recommendation framework is the dynamic computation of the Recommendation Score, which was developed to reflect both the technical specifications of laptops and the actual usage preferences expressed by students. This score is composed of four primary components: Performance, Portability, Software Compatibility, and Price, with each factor assigned a weight specific to the academic cluster it serves. These weightings were not arbitrarily assigned but instead informed by responses from the student survey, which captured not only the attributes students deemed important in their device choice but also their satisfaction with current laptops.

To ensure the Recommendation Score captures the heterogeneity of academic demands, a cluster-specific weighting scheme was applied. For instance, students in highly technical programs such as Artificial Intelligence and Informatics consistently reported the need for high computational performance, resulting in a 50% weighting on Performance. Conversely, students in mobility-intensive fields like Political Science and Business Administration expressed a high priority for battery life and portability, leading to a 50% weighting on the Portability dimension.

The process also incorporated a Satisfaction Influence mechanism, wherein devices that received higher satisfaction ratings in the survey were granted a modest uplift in their final Recommendation Score. This reflects the recognition that performance on paper may not always align with user experience; thus, positive real-world usage feedback is a valuable indicator of appropriateness.
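One way such an uplift could work is sketched below. The exact mechanism is not specified in this section, so everything here is an assumption: a base score in the range 0 to 1, a mean satisfaction rating on a 1 to 5 scale, a maximum uplift of 5%, and the function name itself.

```python
def apply_satisfaction_uplift(
    base_score: float,
    mean_satisfaction: float,
    max_uplift: float = 0.05,   # assumed cap on the uplift (5%)
    scale_min: float = 1.0,     # assumed survey scale
    scale_max: float = 5.0,
) -> float:
    """Scale a base score (0..1) up by at most max_uplift, in proportion to satisfaction."""
    if not scale_min <= mean_satisfaction <= scale_max:
        raise ValueError("mean_satisfaction is outside the survey scale")
    fraction = (mean_satisfaction - scale_min) / (scale_max - scale_min)
    # The result is capped at 1.0 so the uplift never pushes a score out of range.
    return min(1.0, base_score * (1.0 + max_uplift * fraction))


print(apply_satisfaction_uplift(0.80, 4.5))
```

Keeping the uplift small and proportional means survey feedback can break ties between similar devices without overriding the specification-based score.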

Another dynamic adjustment involved usage-based importance scaling. For example, in clusters where over 90% of students rated battery life as a top concern, the weight for Portability was boosted accordingly. Similarly, when survey data indicated that certain software tools (e.g., statistical packages or design suites) were critical in a given field, the Software Compatibility component was correspondingly emphasized.
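The boosting step can be sketched as multiplying one weight and then renormalising so that the weights still sum to 1. The 90% threshold comes from the example above; the boost factor of 1.5, the component keys, and the function names are hypothetical.

```python
def boost_weight(weights: dict, component: str, factor: float) -> dict:
    """Multiply one component's weight by factor, then renormalise to sum to 1."""
    boosted = dict(weights)
    boosted[component] *= factor
    total = sum(boosted.values())
    return {name: value / total for name, value in boosted.items()}


def scale_for_battery_priority(
    weights: dict,
    share_rating_battery_top: float,
    threshold: float = 0.9,   # "over 90% of students" in the text
    factor: float = 1.5,      # assumed boost factor
) -> dict:
    """Boost Portability when the share of students prioritising battery life exceeds the threshold."""
    if share_rating_battery_top > threshold:
        return boost_weight(weights, "portability", factor)
    return dict(weights)


base = {"performance": 0.25, "portability": 0.25, "software": 0.25, "price": 0.25}
print(scale_for_battery_priority(base, 0.95))
```

Renormalising after the boost keeps the result a valid set of weights, so the other components shrink proportionally rather than being left unchanged.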

The Recommendation Score is therefore a weighted composite score, formalized as follows:

$$\text{Recommendation Score} = w_1 \cdot \text{Performance} + w_2 \cdot \text{Portability} + w_3 \cdot \text{Software Compatibility} + w_4 \cdot \text{Price}$$

where $w_1, w_2, w_3, w_4$ are the cluster-specific weights, which sum to 1. These weights were derived through empirical analysis of the survey’s quantitative results and interpreted through the lens of academic task demands.
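The weighted composite can be sketched in a few lines. Only the 50% weights (Performance for technical clusters, Portability for portability-oriented clusters) come from the text; the other weights, the cluster labels, and the assumption that every component is already normalised to the range 0 to 1 (with a higher Price value meaning better value for money) are illustrative.

```python
# 0.50 entries are from the text; all other weights are hypothetical placeholders.
CLUSTER_WEIGHTS = {
    "technical": {"performance": 0.50, "portability": 0.20, "software": 0.20, "price": 0.10},
    "portability": {"performance": 0.15, "portability": 0.50, "software": 0.15, "price": 0.20},
}


def recommendation_score(components: dict, weights: dict) -> float:
    """Weighted sum of normalised component scores; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * components[name] for name in weights)


# Hypothetical laptop with component scores in 0..1.
laptop = {"performance": 0.9, "portability": 0.5, "software": 0.8, "price": 0.4}

for cluster, weights in CLUSTER_WEIGHTS.items():
    print(cluster, round(recommendation_score(laptop, weights), 3))
```

The same laptop scores differently in each cluster, which is the point of cluster-specific weights: a performance-heavy device ranks higher for technical programs than for portability-oriented ones.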

Ultimately, this scoring mechanism allowed the study to make laptop recommendations that were not only technically grounded but also user-aligned, incorporating both objective performance metrics and the subjective but equally vital lived experience of students across disciplines.

Sustainability Score

Our methodology is grounded in the principle that the most sustainable laptop is the laptop you use the longest. Therefore, our score prioritizes repairability, while also considering broader sustainability criteria.

The sustainable scoring system integrates three established frameworks:

- iFixit Repairability Score (Repair Manuals for Every Thing – iFixit): this score assesses the ease of disassembly, availability of replacement parts, accessibility of repair documentation, and the use of non-proprietary tools. Devices are rated on a scale from 1 to 10, with higher scores indicating greater repairability. For instance, a device scoring 10/10 is considered highly repairable, with comprehensive repair guides and modular components. A device scoring 1/10, on the other hand, is very difficult to repair, and many of its replacement components are unavailable.

- EPEAT (Electronic Product Environmental Assessment Tool) (Computers & Displays Searching | EPEAT Registry): as a Type 1 ecolabel managed by the Global Electronics Council, it evaluates the environmental impact of electronic products throughout their lifecycle, including design, production, and energy use. Products are rated Bronze, Silver, or Gold based on the number of optional criteria they meet beyond the required ones. These criteria consider material selection, product longevity, and energy conservation.

- TCO Certified (Search for certified product models in Product Finder): this certification focuses on sustainability in IT products, addressing areas such as climate impact, hazardous substances, circular product design, and responsible supply chains. For a product to be certified, it must meet all criteria.

In our scoring model, we assign the highest weight to the iFixit Repairability Score (40%) to stress the importance of product longevity through repairability. EPEAT (30%) and TCO Certified (30%) ratings provide additional context on the environmental and social impacts of products. The survey results show that students also consider these attributes important (repairability, the use of recycled materials, energy efficiency, and brand ethics).
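A minimal sketch of how the 40/30/30 weighting could be combined is shown below. The weights are those stated above; how each input is normalised is not specified in this section, so the mapping of the iFixit score to 0..1, the EPEAT tier values, and the treatment of TCO Certified as a yes/no flag are assumptions, as is the function name.

```python
from typing import Optional

# Assumed mapping of EPEAT tiers to a 0..1 value; None means not registered.
EPEAT_POINTS = {None: 0.0, "bronze": 1 / 3, "silver": 2 / 3, "gold": 1.0}


def sustainability_score(ifixit: float, epeat_tier: Optional[str], tco_certified: bool) -> float:
    """Combine the three inputs with the 40/30/30 weights from the text."""
    if not 1 <= ifixit <= 10:
        raise ValueError("iFixit score must be between 1 and 10")
    repairability = ifixit / 10                # assumed normalisation
    epeat = EPEAT_POINTS[epeat_tier]
    tco = 1.0 if tco_certified else 0.0        # certified products meet all criteria
    return 0.4 * repairability + 0.3 * epeat + 0.3 * tco


print(round(sustainability_score(9, "gold", True), 3))
print(round(sustainability_score(4, "bronze", False), 3))
```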

Requirement fulfilment

This check verifies whether the conditions specified by the programme coordinator for the laptop are met. If this is the case, a green tick is displayed.
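The check amounts to a pass/fail comparison of a laptop's specifications against the coordinator's conditions. The sketch below is illustrative only: the actual conditions are not listed in this section, so the fields (RAM, storage, operating system) and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Laptop:
    ram_gb: int
    storage_gb: int
    operating_system: str


@dataclass(frozen=True)
class ProgramRequirement:
    # Hypothetical fields; real requirements come from the programme coordinators.
    min_ram_gb: int
    min_storage_gb: int
    allowed_operating_systems: frozenset


def fulfils_requirements(laptop: Laptop, requirement: ProgramRequirement) -> bool:
    """True only if every condition is met."""
    return (
        laptop.ram_gb >= requirement.min_ram_gb
        and laptop.storage_gb >= requirement.min_storage_gb
        and laptop.operating_system in requirement.allowed_operating_systems
    )


def requirement_badge(laptop: Laptop, requirement: ProgramRequirement) -> str:
    """Return a tick when the requirements are met, otherwise an empty string."""
    return "✓" if fulfils_requirements(laptop, requirement) else ""


requirement = ProgramRequirement(16, 512, frozenset({"Linux", "macOS"}))
print(requirement_badge(Laptop(16, 512, "Linux"), requirement))
```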